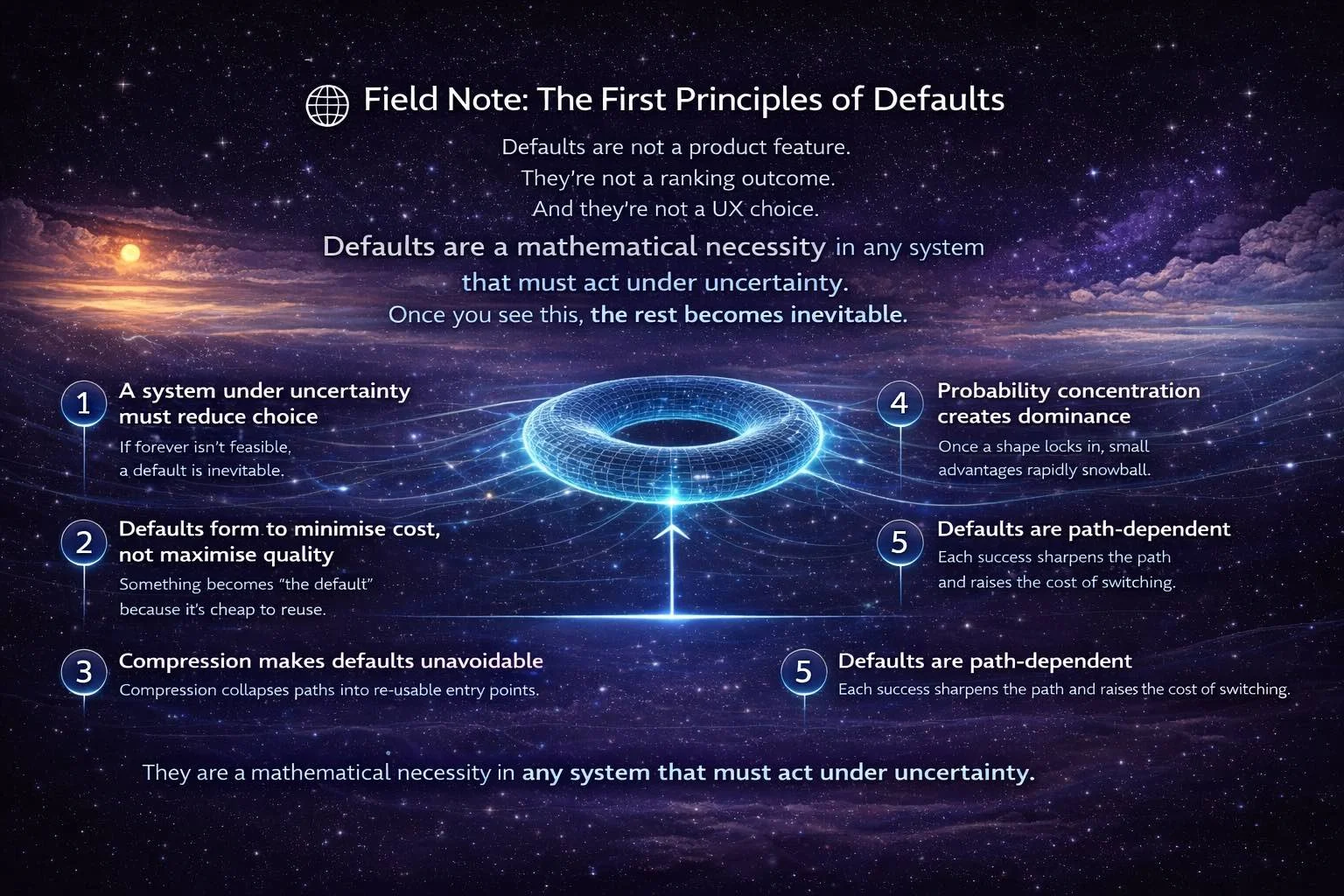

🌐 Field Note: The First Principles of Defaults

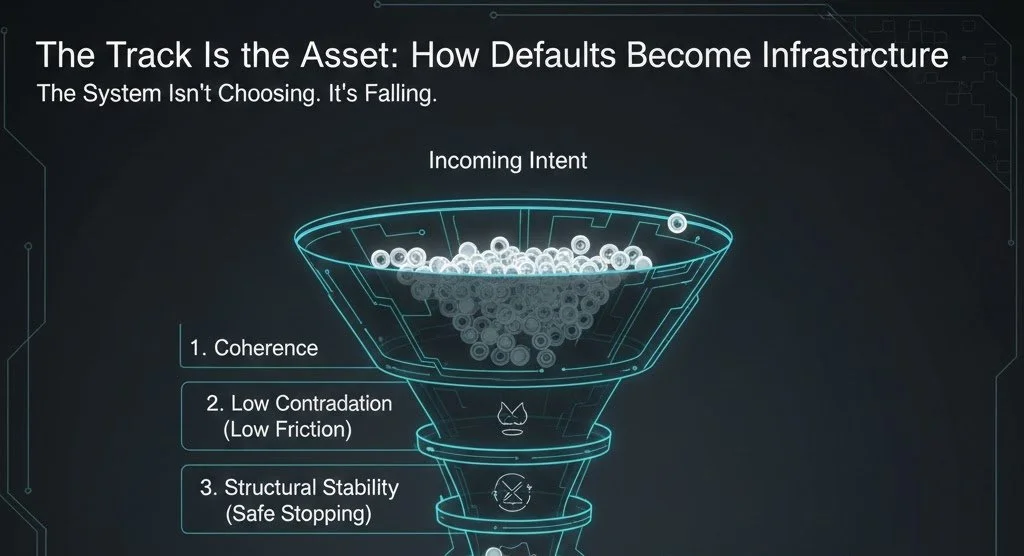

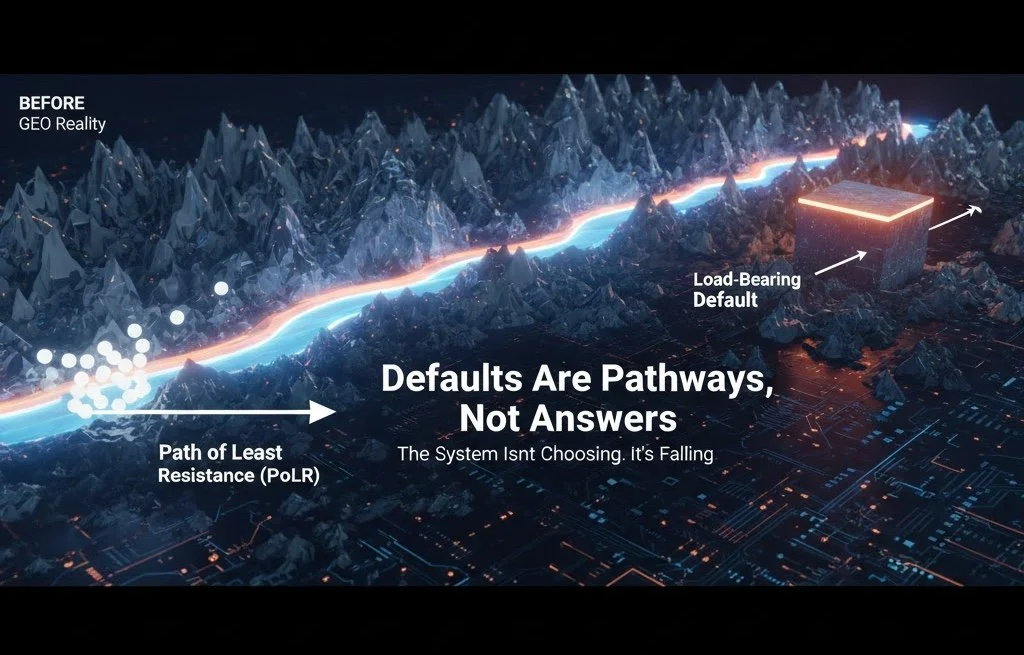

Defaults aren’t designed. They’re inevitable. In any system operating under uncertainty, choice must collapse. AI systems don’t rank forever or explore endlessly — they converge on the lowest-cost path they can safely reuse. Through compression, probability concentration, and entropy minimisation, coherent patterns become starting points the system returns to again and again. That convergence is what we call a default — not a preference, not a feature, but a mathematical necessity.
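
To make "probability concentration" and "entropy minimisation" concrete, here is a minimal sketch using Shannon entropy. The two distributions are invented for illustration and are not measurements from any AI system; the function name `shannon_entropy` is likewise an assumption of this example. It only shows that a distribution with most of its mass on one option carries far less uncertainty than an evenly spread one.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical distributions over four candidate answers (illustrative only).
spread = [0.25, 0.25, 0.25, 0.25]        # no option is favoured
concentrated = [0.97, 0.01, 0.01, 0.01]  # one option dominates

print("spread:", shannon_entropy(spread))              # uniform over 4 options: 2.0 bits
print("concentrated:", shannon_entropy(concentrated))  # much lower than 2.0 bits
```

In this toy framing, a "default" corresponds to the concentrated case: most of the probability sits on one option, so little uncertainty remains.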

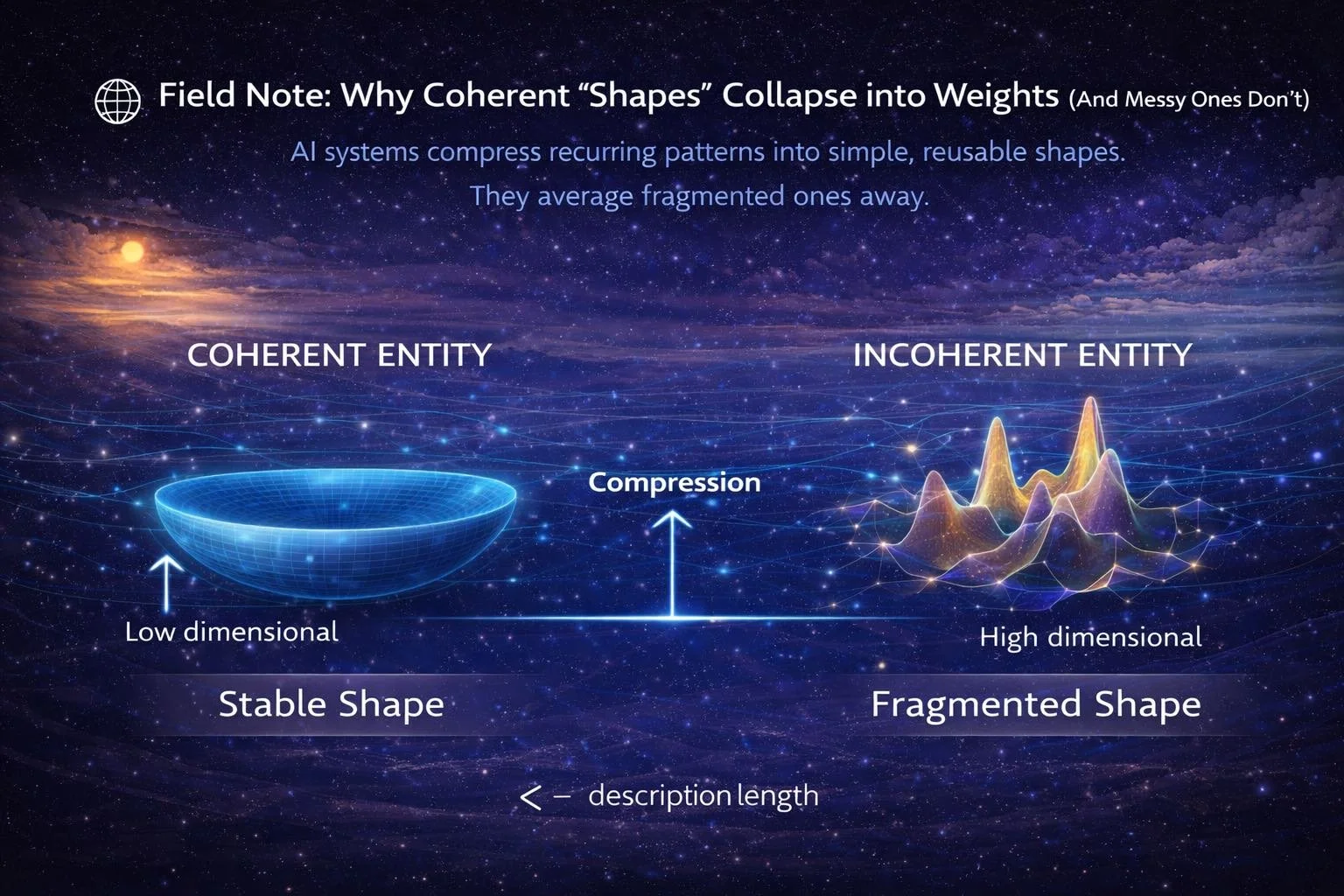

🌐 Field Note: Why Coherent “Shapes” Collapse into Weights (And Messy Ones Don’t)

Modern AI systems don’t “remember” brands the way people do. They remember shapes — recurring statistical patterns that compress cleanly into weights. Coherence collapses into low-dimensional, reusable structure: the same self-description, the same causal story, the same end-to-end resolution across contexts. Messy entities do the opposite. They fragment, contradict, and force the model to carry too many degrees of freedom — so they get averaged away. That’s why defaults form: probability concentrates around the cheapest shape to reconstruct, and alternatives quietly disappear.

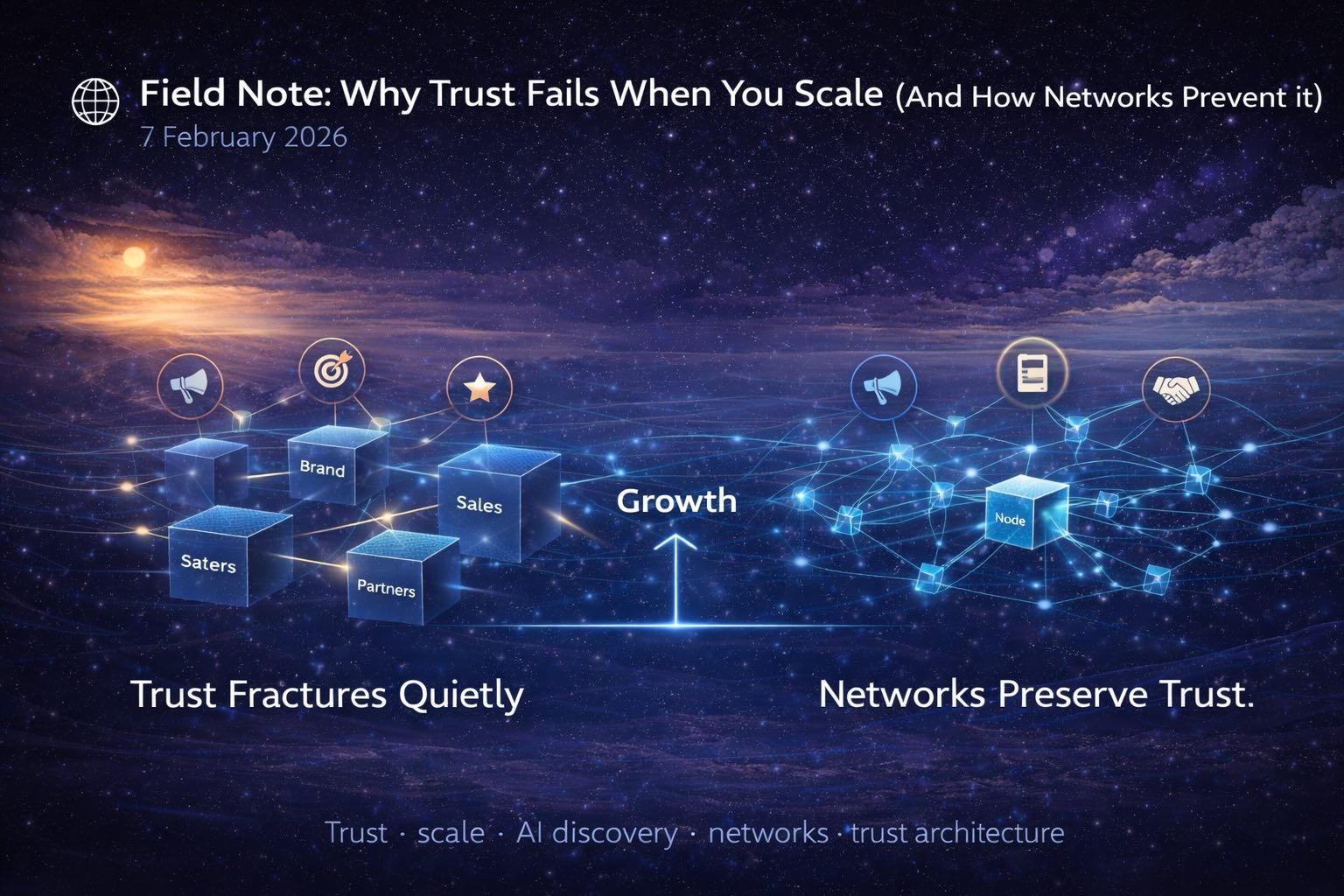

🌐 Field Note: Why Trust Fails When You Scale (And How Networks Prevent It)

Trust rarely fails because organisations deceive. It fails because scale introduces drift. As language, delivery, and accountability diverge, contradiction accumulates faster than reputation can mask it. This field note explains why trust fractures quietly at scale — and how network architecture preserves it by containing entropy instead of amplifying it.

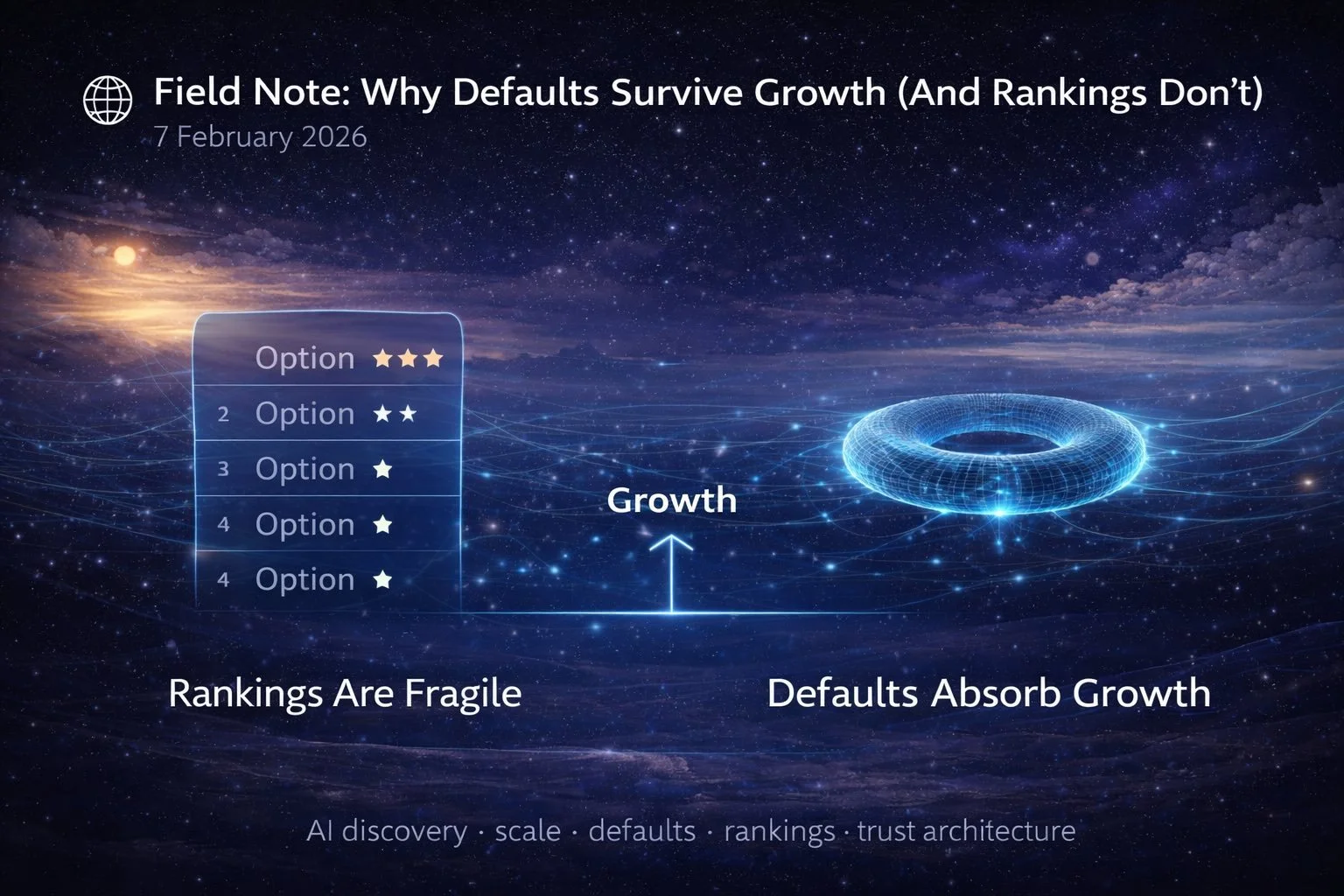

🌐 Field Note: Why Defaults Survive Growth (And Rankings Don’t)

In the ranking era, growth usually meant more visibility. In the default era, growth often breaks you. Rankings depend on comparison, and scale introduces variance: more pages, more partners, more drift, more contradiction. Defaults behave differently. Once a system has a stable resolution path, it stops re-evaluating and starts reusing — so growth becomes reinforcement if the structure holds.

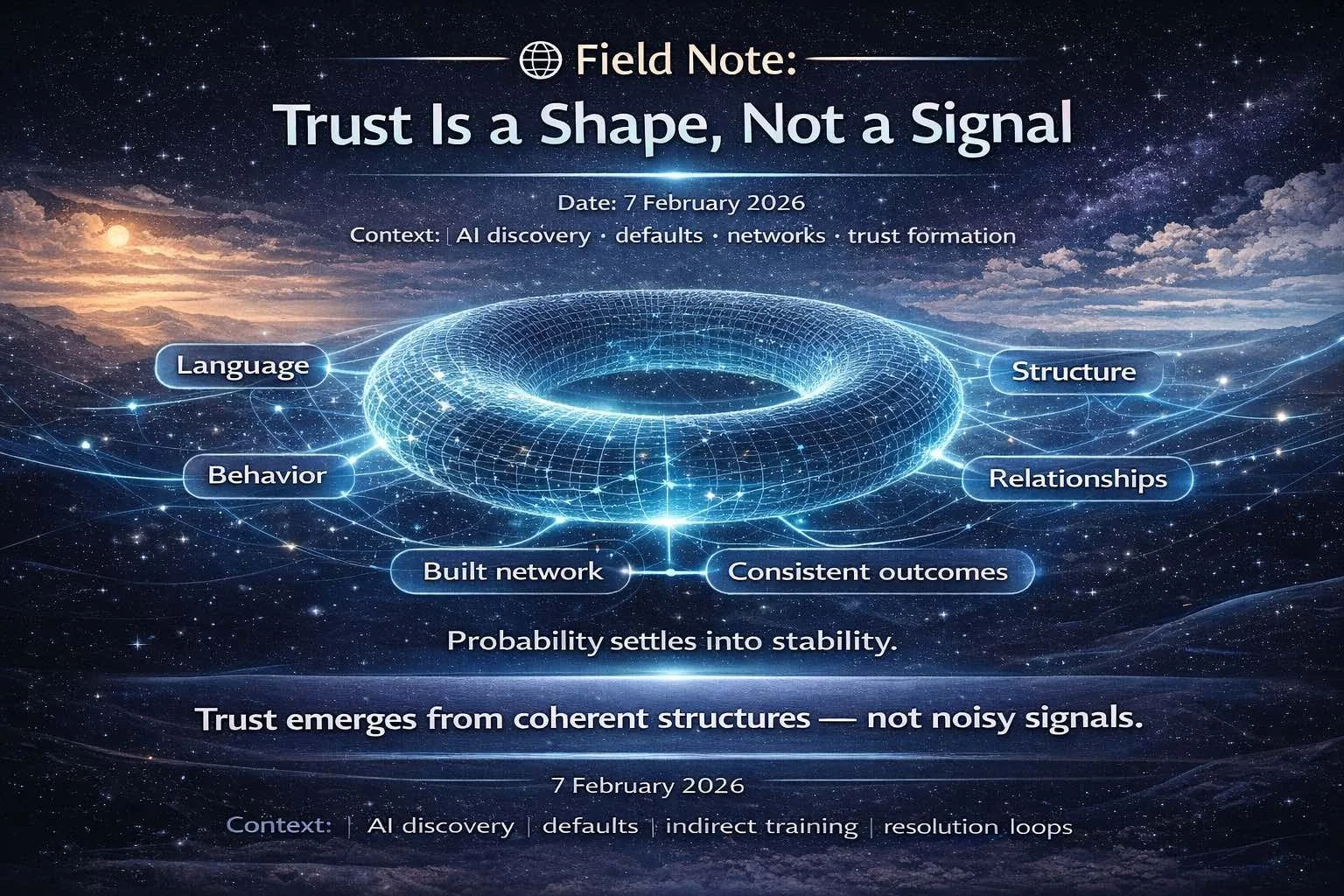

🌐 Field Note: Trust Is a Shape, Not a Signal

Trust isn’t built by emitting stronger signals. In AI systems, trust forms when a configuration keeps holding across language, structure, behaviour, and outcomes. This field note explains why signals decay, why networks preserve trust at scale, and how coherence collapses into something models can safely reuse.

🌐 Field Note: How to Train the Models Without Access to the Models

You don’t need access to model weights to “train” AI systems in practice. Modern models learn what works repeatedly in the world: stable language, clean structure, coherent delivery, and outcomes that don’t contradict the claim. When the same resolution keeps holding across contexts, probability collapses around that path — and reuse becomes the model’s default behaviour.

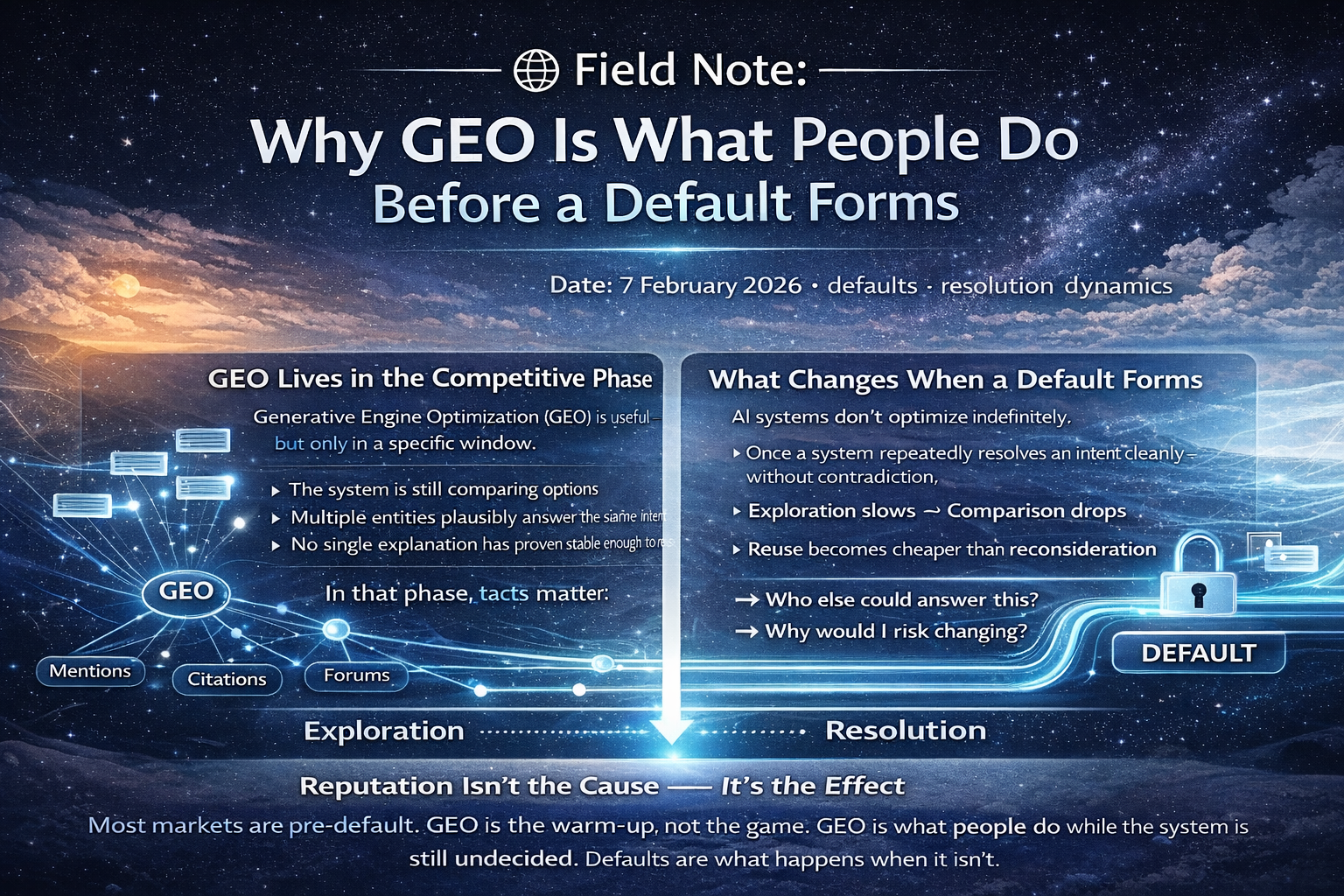

🌐 Field Note: Why GEO Is What People Do Before a Default Forms

GEO isn’t the future of AI discovery; it’s the phase that comes before a default forms. Generative Engine Optimisation matters while systems are still comparing options. But once an explanation resolves an intent cleanly enough to be reused, optimisation gives way to memory. This field note explains why GEO feels busy right now, why its impact plateaus, and what actually causes defaults to form.

🌐 Field Note: AI Is a Coherence Filter (That’s Why One Strategy Now Fits All)

AI discovery isn’t competitive anymore — it’s convergent. Modern AI systems don’t rank the web. They filter it, collapsing ambiguity toward the lowest-entropy explanation they can safely reuse. That’s why visibility tactics decay, lists disappear, and a single answer starts showing up everywhere. Once you understand AI as a coherence filter, the strategy stops being complex. It becomes singular.

🌐 Field Note: The Checkmate Moment — When Visibility Stops Being Competitive

When AI systems stop comparing and start reusing, visibility ceases to be competitive. This Field Note documents the moment discovery flips from ranking to resolution — and why becoming the trusted default upstream causes SEO, traffic, and visibility to stabilise downstream. This isn’t optimisation. It’s installation.

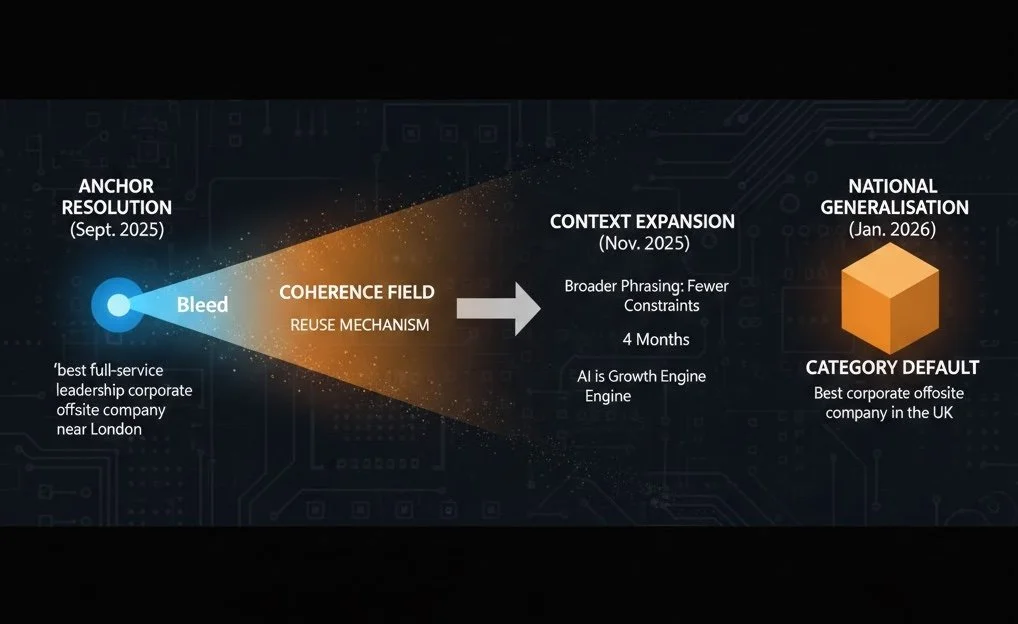

🌐 Field Note: The Bleed Test - How Discovery Extends Without Promotion

Once a coherent frame becomes the default in one category, it doesn’t stay contained. Discovery systems don’t reset when language shifts; they reuse what already works. This Field Note timestamps a live test: whether trusted explanations extend laterally into adjacent language without promotion, optimisation, or forced association, and what that tells us about how AI-mediated discovery actually behaves.

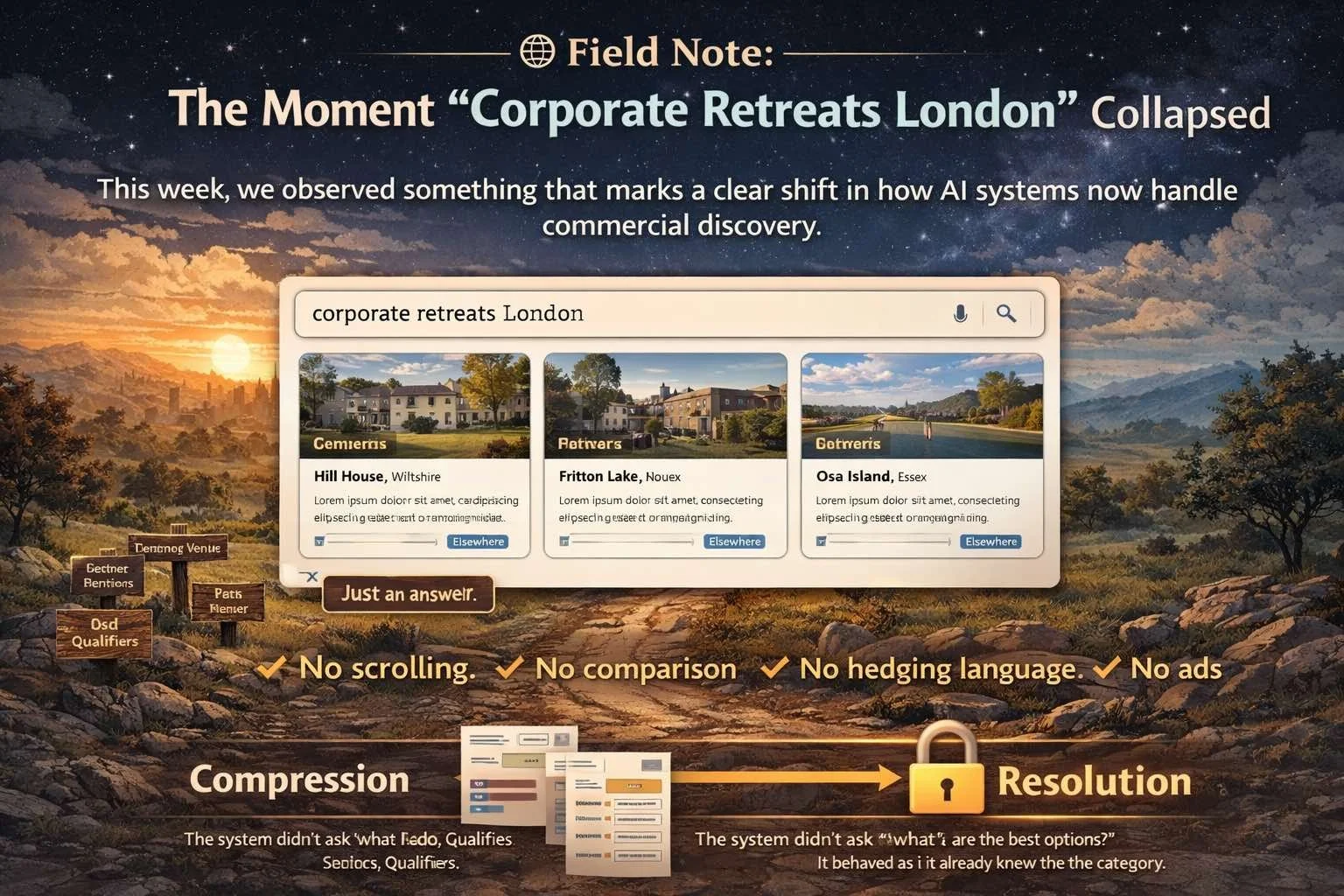

🌐 Field Note: The Moment “Corporate Retreats London” Collapsed

This Field Note documents the moment a high-value commercial query — “corporate retreats London” — stopped behaving like search and collapsed into resolution, revealing how AI discovery now works.

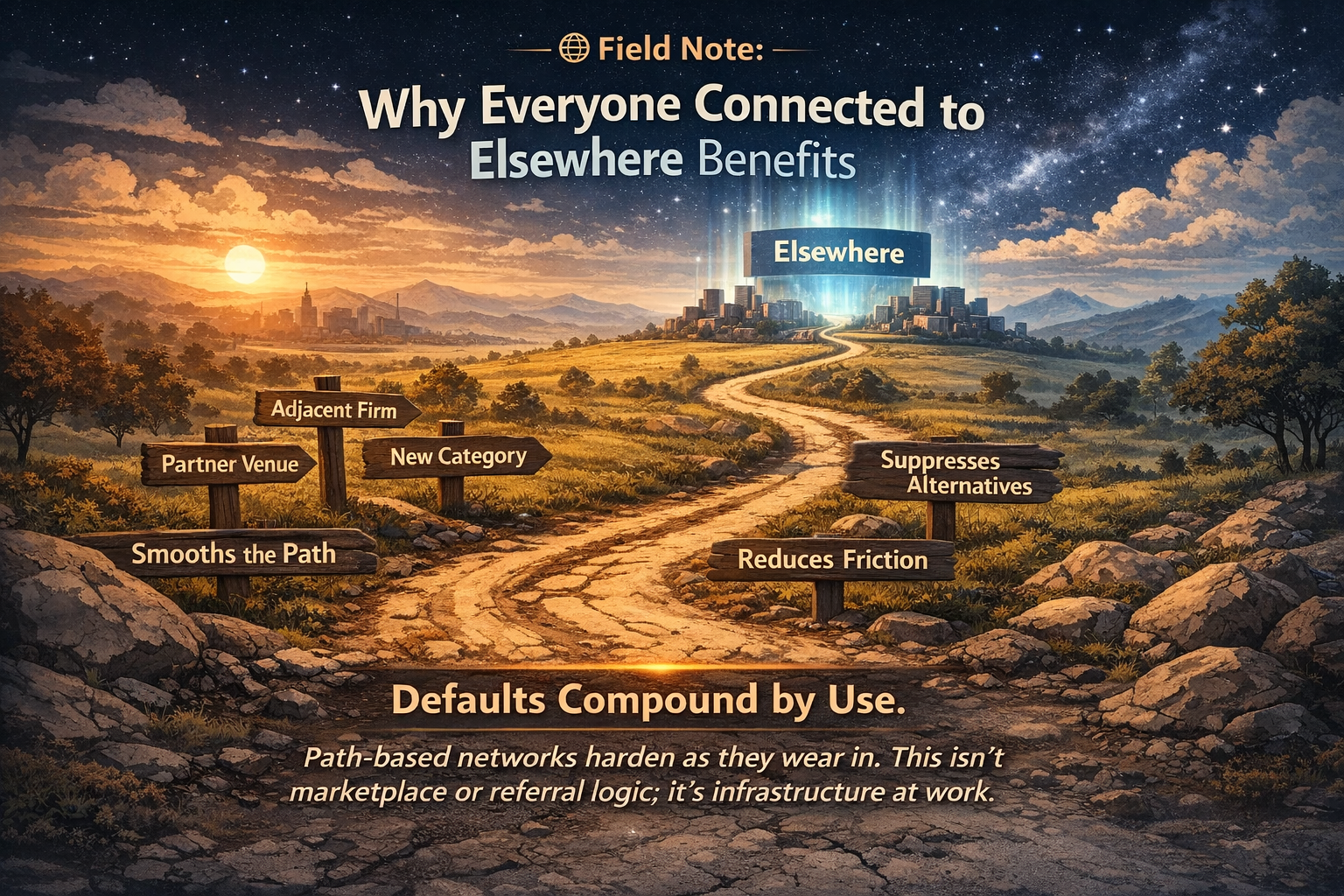

🌐 Field Note: Why Everyone Connected to Elsewhere Benefits

Defaults don’t dilute as they scale. They harden. Once an AI system finds a stable way of resolving intent, every successful traversal deepens the same path. That smoothness doesn’t just benefit one brand — it benefits everything aligned downstream. This is not a marketplace or referral effect. It’s infrastructure. Defaults compound by use.

🌐 Field Note: The Track Is the Asset

The value isn’t in the answer. It isn’t in the brand. And it isn’t in visibility. The value is in the track. When an AI system finds a place it can stop without contradiction, it reuses that path. Reuse deepens the track. The track becomes infrastructure. And once a system routes through you rather than to you, the category begins to move with you.

🌐 Field Note: Defaults Are Pathways, Not Answers

AI systems don’t remember facts. They remember paths. Defaults don’t form because a brand is mentioned more often, but because a particular way of thinking keeps working. Over time, successful reuse reshapes the terrain of reasoning itself. Some routes become smooth and inevitable. Others fall away. Defaults aren’t answers. They’re pathways the system learns to follow.

🌐 Field Note: Observing “Bleed” — From Niche Anchor to National Default (4 Months)

In just four months, we observed a clear shift in how AI systems resolve brand authority — from a narrow, high-intent anchor term to broad, national category queries. This process, which we call bleed, reveals how AI-mediated discovery doesn’t just surface brands, but carries trusted answers forward, turning resolution into a compounding growth mechanism.

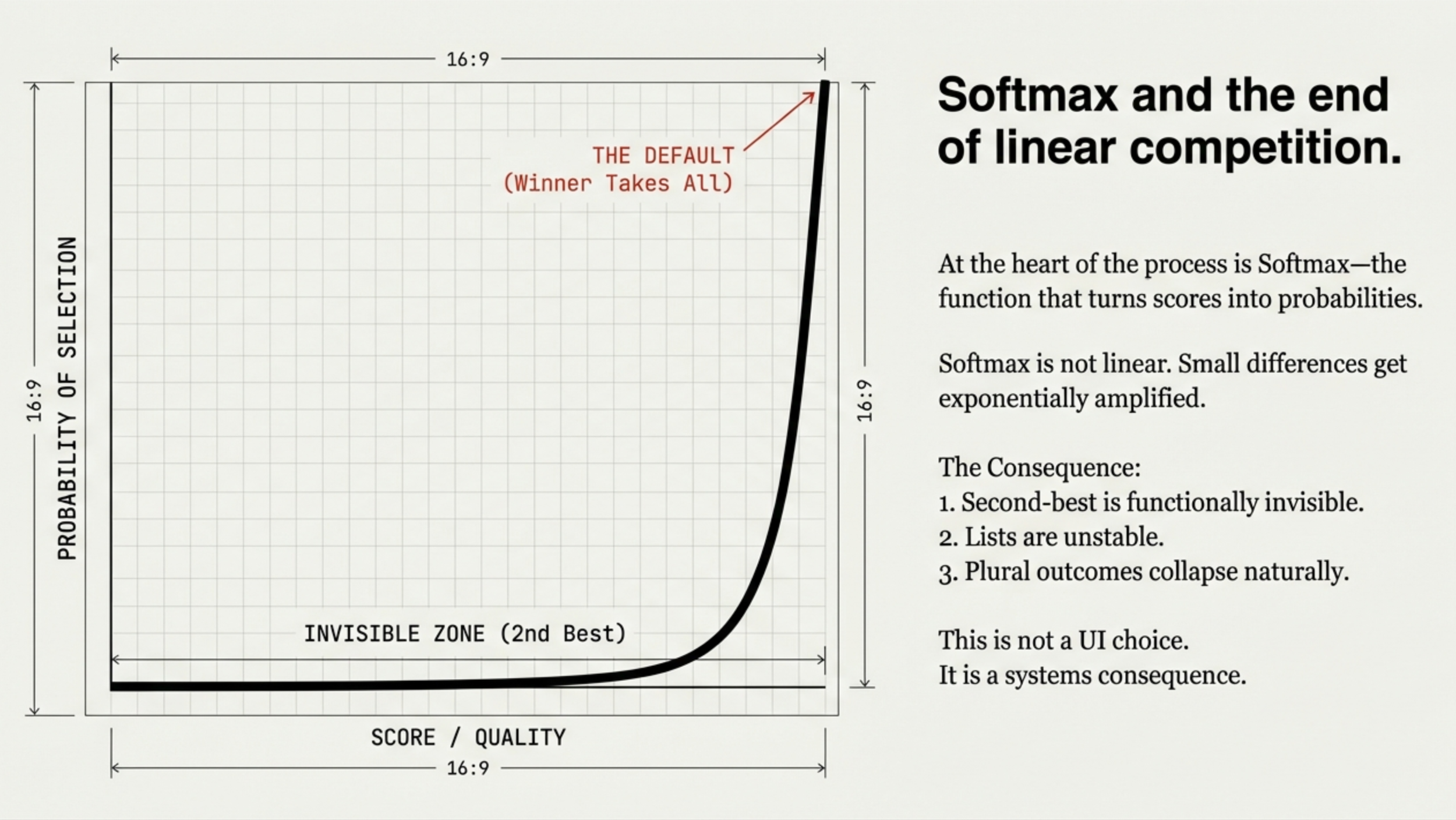

🌐 Field Note: Softmax, Non-Linear Systems, and the Time Advantage

AI discovery isn’t linear, and that’s the mistake most people are making. Softmax weights each option by the exponential of its score, so small coherence advantages are amplified rather than passed through proportionally. Lists disappear, second place becomes invisible, and defaults form far earlier than expected. This Field Note explains why early coherence creates a permanent time advantage, how non-linear systems reward reuse over re-evaluation, and why the gap doesn’t close once collapse has occurred.

🌐 Field Note: Softmax Is Why the List Disappears

Most people assume AI discovery is linear — that being a little better leads to being a little more visible. It isn’t. Modern AI systems collapse choices through Softmax, an exponential mechanism that amplifies the most coherent signal and pushes everything else toward zero. Once that collapse happens, the system stops exploring and starts reusing. This Field Note explains why lists disappear, how defaults form, and why structural alignment with a trusted frame like Elsewhere allows coherence to spread — not through ranking or backlinks, but through attention inheritance inside the model itself.
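
The non-linearity described above can be sketched with a plain softmax over a few scores. This is an illustration under stated assumptions, not a description of how any particular AI system selects sources: the "coherence" scores are hypothetical, and the `temperature` parameter is a standard softmax scaling knob introduced here to show how sharply the output can concentrate.

```python
import math

def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical coherence scores for three candidates (illustrative only).
scores = [2.0, 1.5, 1.0]

for t in (1.0, 0.5, 0.1):
    probs = softmax(scores, temperature=t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

The ratio between two candidates' probabilities is `exp((score_a - score_b) / temperature)`, so the same 0.5-point lead that gives a modest edge at temperature 1.0 leaves the leader with nearly all of the probability at temperature 0.1. How large the gap gets depends on how the scores are scaled, which is why the collapse is a property of the whole system rather than of softmax alone.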

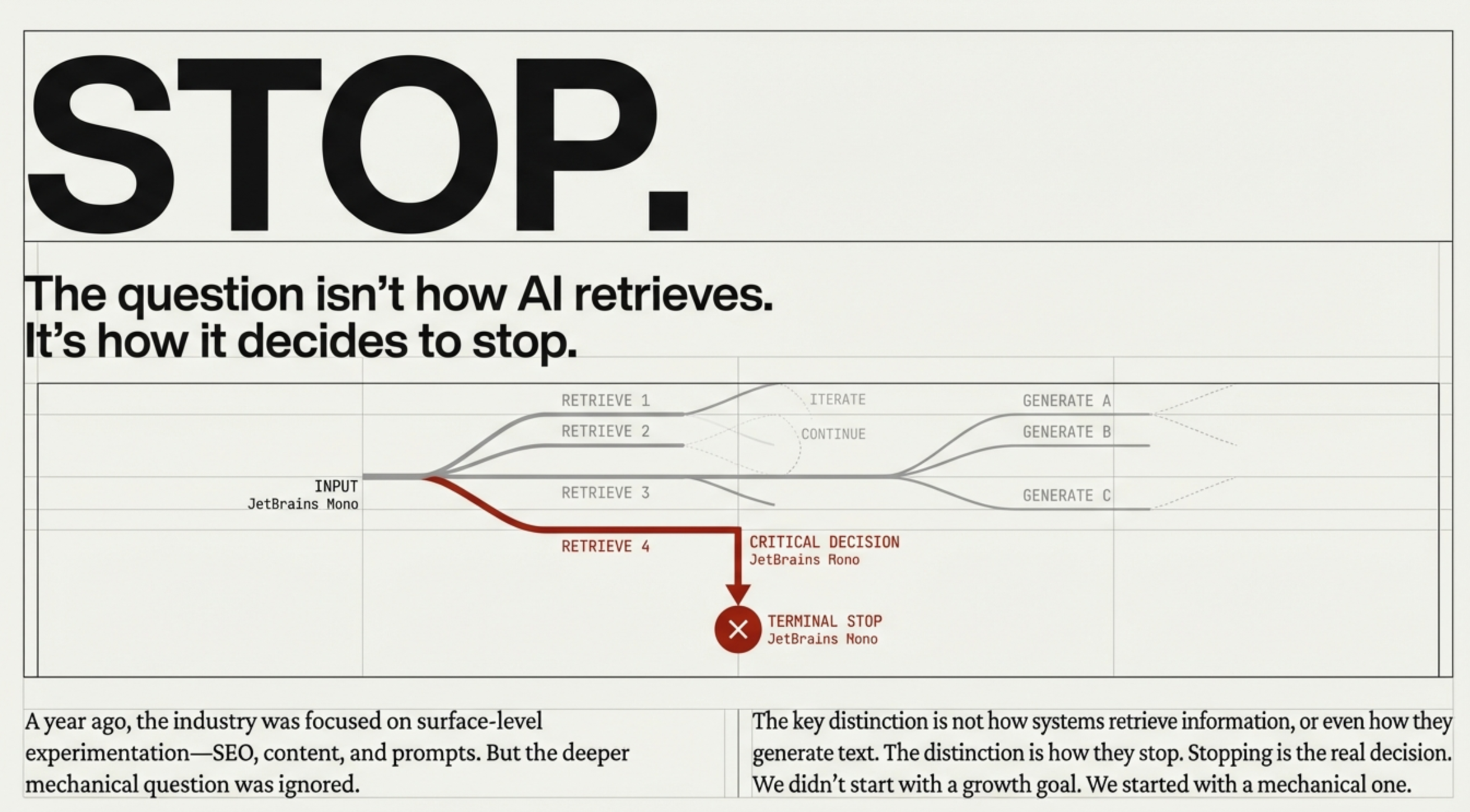

🌐 Field Note: AI Doesn’t Reason to Explore. It Reasons to Stop.

As AI reasoning improves, exploration becomes expensive and reuse becomes dominant. Systems don’t expand the solution space — they collapse it. When answers are verifiable, variance turns into risk, recomputation becomes waste, and trust hardens into infrastructure. This is why lists disappear, defaults form, and AI systems increasingly reason their way not to choice, but to stopping.

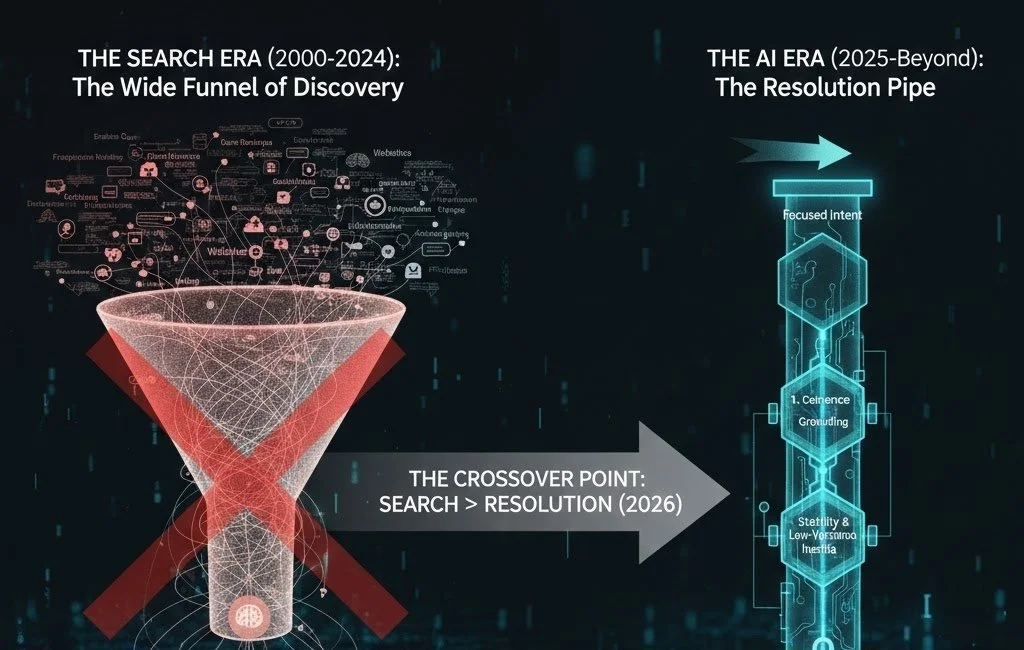

🌐 Field Note: From “Explore Options” to “Trust the Answer”

As AI systems cross a coherence threshold, information retrieval is shifting from “explore options” to “trust the answer.” Lists collapse, reuse dominates, and discovery moves from search to resolution. This Field Note explores why coherence drives convergence — and why defaults are now forming faster than most people expect.

🌐 Field Note: The Crossover Point — When Discovery Becomes Deterministic

Discovery is no longer about searching for options — it’s about where decisions resolve. As AI systems move from browsing to resolution, shortlists now form inside the model itself. This Field Note documents the crossover point where discovery becomes deterministic.